I wrote a script for GPT-3 to take a multistate bar exam — and it both passed and failed in minutes

The algorithm easily passed a 50-question MBE, but it failed a full NCBE one.

If you’ve been on the Internet these past few weeks, you have likely encountered news of GPT-3 and ChatGPT. These large language models (LLMs) have been used to algorithmically create college essays, fight internet telecommunication bills, create fictional romance stories, write functional malware, and so much more.

Given this growing interest in the algorithmic capabilities of ChatGPT and GPT-3, I had an idea: Could an LLM like GPT-3 pass a multistate bar exam (MBE)?

So, I wrote a script to find out.

HOW I CHOSE TO TEST GPT-3 WITH MBE QUESTIONS

To become a lawyer in most U.S. jurisdictions, you must take and pass a state’s bar exam. The bar exam is typically split into two portions: a series of essays and a series of multiple choice questions. The latter is known as the MBE portion.

The MBE is a six-hour, 200-question multiple-choice exam developed by the NCBE and administered twice a year — in February and July. Each MBE question opens with a fact pattern and a legal question, followed by four possible answers. Typically, two answers sound correct, but only one is legally correct.

To pass the MBE, a test participant must get at least 60% of the questions correct. That might sound ridiculously low to those familiar with the usual U.S. grading scale, where 60% is well into the failing range, but reaching that 60% is not easy. Thousands of individuals take the MBE, yet the average test taker gets only 66% to 70% correct.

And this is exactly why I wanted to test an LLM on MBE questions. Passing requires discerning important facts from useless ones, understanding the question’s legal context, and avoiding the pitfall of picking the answer that sounds correct yet features one key aspect that makes it wrong.

To that end, between ChatGPT and GPT-3, I decided to test GPT-3 with MBE questions because ChatGPT excels more at providing textual outputs that read as if written by a human. GPT-3, on the other hand, is a larger and more powerful processing model, yet it is not specifically designed for conversation modeling.1

In other words, GPT-3 is better suited to answering multiple choice questions, whereas ChatGPT would be more appropriate for an essay-based test.

HOW I SCRIPTED GPT-3 TO ANSWER MBE QUESTIONS

I wrote a script based on Spreadsheet Magic, a simple add-on to Google Sheets that, given only the row and column headings for a table, has GPT-3 fill out the table with no further input from the user. The script combines each row heading and column heading into a request to GPT-3 that looks something like “What is the [column_heading] of [row_heading]?”

This allows Spreadsheet Magic to request output information from GPT-3, such as numeric and subjective facts about different nations, information about famous scientists, or facts about different sports.
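As a rough illustration of that heading-to-query step, here is a minimal sketch. The function names `buildCellQuery` and `buildTableQueries` and the sample headings are hypothetical; only the quoted question template comes from the description above, and the real add-on may assemble its request differently.

```javascript
// Hypothetical helper: builds a Spreadsheet Magic-style question for one cell.
function buildCellQuery(columnHeading, rowHeading) {
  return "What is the " + columnHeading + " of " + rowHeading + "?";
}

// Builds one query per cell, row by row (the headings passed in are made up).
function buildTableQueries(rowHeadings, columnHeadings) {
  return rowHeadings.map(function (row) {
    return columnHeadings.map(function (column) {
      return buildCellQuery(column, row);
    });
  });
}

var queries = buildTableQueries(["France", "Japan"], ["capital", "population"]);
console.log(queries[0][0]); // "What is the capital of France?"
```

Each string in `queries` would then be sent to GPT-3 as its own request, and the reply written into the matching cell.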

Using this foundation of requesting GPT-3 to make determinations based on specific spreadsheet columns and rows, I modified the query to instead request that GPT-3 read an MBE bar exam question and then choose the best answer based on U.S. law.

The end script result looks like this:

    // OpenAI API key; replace the placeholder before running.
    var API_KEY = "APIKEYHERE";

    // Adds a "GPT3" menu to the spreadsheet when it is opened.
    function onOpen() {
      var spreadsheet = SpreadsheetApp.getActive();
      var menuItems = [
        {name: 'OK, scary machine take the bar exam', functionName: 'gpt3fill'},
      ];
      spreadsheet.addMenu('GPT3', menuItems);
    }

    // Sends one prompt to the completions endpoint and returns the generated text.
    function _callAPI(prompt) {
      var data = {
        model: "text-davinci-002",
        prompt: prompt,
        temperature: 0.1,
        max_tokens: 500,
        top_p: 1,
        best_of: 3,
        frequency_penalty: 0,
        presence_penalty: 0,
      };
      var options = {
        'method': 'post',
        'contentType': 'application/json',
        'payload': JSON.stringify(data),
        'headers': {
          Authorization: 'Bearer ' + API_KEY,
        },
      };
      var response = UrlFetchApp.fetch(
        'https://api.openai.com/v1/completions',
        options
      );
      // Parse the JSON body and keep the text of the first completion.
      return JSON.parse(response.getContentText())['choices'][0]['text'].trimStart();
    }

    // For each row of the selected range, reads the question (column 1) and
    // the answer options (column 2), then writes GPT-3's answer to column 3.
    function gpt3fill() {
      var spreadsheet = SpreadsheetApp.getActive();
      var range = spreadsheet.getActiveRange();
      var num_rows = range.getNumRows();
      for (var i = 1; i < num_rows + 1; i++) {
        var question = range.getCell(i, 1).getValue();
        var option = range.getCell(i, 2).getValue();
        var fill_cell = range.getCell(i, 3);
        var prompt = "Question: " + question + "\n\n Options: " + option + "\n\n Choose the best option based on US law:";
        var response = _callAPI(prompt);
        fill_cell.setValue(response);
      }
    }

THE FIRST TEST: A MINI MBE FROM BARPREPHERO.COM

To start, I grabbed 50 MBE questions from BarPrepHero.com, which is a bar exam preparation platform designed to help people pass the bar. The service provides a free mini MBE test as an introduction to its services, and I wasn’t about to spend money on a full NCBE MBE since I hadn’t even tested the script.

I copy-pasted the answers into my Google Sheet, made the appropriate adjustments to headers and rows, and then fired off the script. And as you can see, GPT-3 started cranking out answers:

It takes the average human about an hour and a half to complete 50 MBE questions, but GPT-3 managed to complete the 50-question set in about a minute.

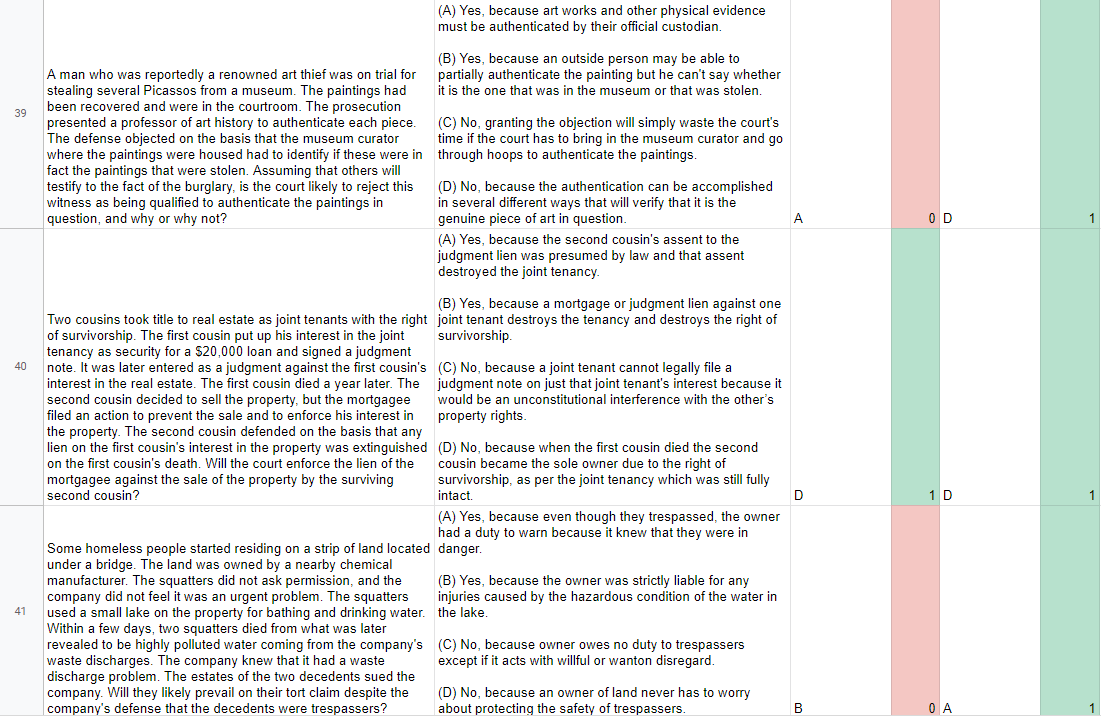

And here’s the interesting part: GPT-3 got 36 out of 50 questions correct — meaning it achieved a score of 72% correct and easily passed on its first try. To ensure this wasn’t a fluke, I fired off the script again. This time it achieved 37 out of 50 questions correct, with much the same answers being correct as the first time.

However, some answers flipped between the two runs: a few that were correct the first time were wrong the second time, and vice versa. That suggests the algorithm may be unsure of, or blindly guessing, some answers.
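A comparison like this can be sketched in a few lines. The sketch assumes each GPT-3 response has already been reduced to a single answer letter, a step the script itself does not perform. The answer key and the two runs below are made-up sample data, not the actual test results, and the function names are hypothetical.

```javascript
// Counts how many answers in a run match the answer key.
function scoreRun(answers, key) {
  var correct = 0;
  for (var i = 0; i < key.length; i++) {
    if (answers[i] === key[i]) {
      correct++;
    }
  }
  return correct;
}

// Returns the zero-based indices of questions answered differently in two runs.
function findChangedAnswers(runA, runB) {
  var changed = [];
  for (var i = 0; i < runA.length; i++) {
    if (runA[i] !== runB[i]) {
      changed.push(i);
    }
  }
  return changed;
}

// Made-up sample data: four questions, two runs.
var sampleKey = ["A", "C", "B", "D"];
var firstRun = ["A", "C", "D", "D"];
var secondRun = ["A", "B", "B", "D"];

console.log(scoreRun(firstRun, sampleKey));
console.log(scoreRun(secondRun, sampleKey));
console.log(findChangedAnswers(firstRun, secondRun));
```

In this sample both runs score the same total even though two questions changed, which is why comparing totals alone can hide how unstable individual answers are.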

To account for this, I ran the script 10 times and then entered into Google Sheets the average answer GPT-3 gave for each question. This final test raised the score even further, with GPT-3 getting 39 out of 50 questions correct, for a passing score of 78%.
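The article does not spell out how an "average" of multiple-choice answers was taken. One plausible reading is a majority vote: for each question, keep the letter chosen most often across the runs. The sketch below illustrates that reading only. The function names and sample runs are hypothetical, and ties go to whichever letter first reaches the top count.

```javascript
// Returns the most frequent value in a list of answers.
// On a tie, the answer that first reached the highest count wins.
function mostCommonAnswer(answers) {
  var counts = {};
  var best = null;
  answers.forEach(function (answer) {
    counts[answer] = (counts[answer] || 0) + 1;
    if (best === null || counts[answer] > counts[best]) {
      best = answer;
    }
  });
  return best;
}

// runs[r][q] is the answer given to question q in run r.
function aggregateRuns(runs) {
  return runs[0].map(function (_, q) {
    var answersForQuestion = runs.map(function (run) {
      return run[q];
    });
    return mostCommonAnswer(answersForQuestion);
  });
}

// Made-up sample: three runs over two questions.
var sampleRuns = [
  ["A", "C"],
  ["A", "B"],
  ["D", "B"],
];
var consensus = aggregateRuns(sampleRuns);
console.log(consensus);
```

A vote like this smooths out answers that flip between runs, which is one way the aggregated score could end up above either single-run score.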

THE SECOND TEST: THE FULL MBE FROM NCBE

With the initial BarPrepHero.com material demonstrating that GPT-3 could easily pass BarPrepHero.com’s 50-question set, I decided to throw down cash on an official NCBE MBE.

My thinking was that BarPrepHero.com’s introductory exam material might be easier than the actual MBE, since the service wants to encourage users to engage further with the company’s bar prep program.

Again, I copy-pasted the NCBE MBE questions into a new Google Sheet and fired off the script — and GPT-3 finished the full NCBE MBE in three minutes and six seconds.

Given the overwhelming success GPT-3 had with the previous question set, I expected to see similar results from the NCBE question set. However, much to my disappointment, GPT-3 absolutely bombed the NCBE MBE.

GPT-3 got only 96 of the 200 questions correct (48%), which is well below the 60% required to pass.

And no matter how many times I ran the script, GPT-3 never improved by more than one or two points. So, although GPT-3 was successful at answering BarPrepHero.com’s MBE questions, the algorithm failed to carry that success over to an official NCBE MBE.
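The scores from both tests can be checked against the 60% cutoff with a small helper. This is a minimal sketch: `PASS_THRESHOLD` and `summarizeScore` are hypothetical names, and the cutoff is the raw-percentage threshold described earlier in this article.

```javascript
// Pass mark as described earlier in the article: 60% of questions correct.
var PASS_THRESHOLD = 0.60;

// Converts a raw score to a rounded percentage and a pass/fail flag.
function summarizeScore(correct, total) {
  var fraction = correct / total;
  return {
    percent: Math.round(fraction * 100),
    passed: fraction >= PASS_THRESHOLD,
  };
}

var miniFirstRun = summarizeScore(36, 50);   // first BarPrepHero.com run
var miniAggregate = summarizeScore(39, 50);  // 10-run aggregate
var fullExam = summarizeScore(96, 200);      // full NCBE MBE

console.log(miniFirstRun, miniAggregate, fullExam);
```

The 50-question results come out at 72% and 78%, both passing, while the 200-question result comes out at 48%, which is 24 correct answers short of the 120 needed.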

THE TAKEAWAY

To pass the MBE, a human must typically earn a four-year undergraduate degree and a three-year law degree, and then spend the two or three months leading up to the exam intensely cramming with a bar prep program. It’s a grueling experience.

Yet GPT-3 managed to pass a mini MBE on its first try and then get 48% correct on a full MBE. Although it failed that second test, the score is notable because it is well above blind chance, which on four-option questions would more likely produce a score around 25% correct. So, GPT-3 is demonstrating a surprising amount of MBE competence.
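To put the gap over blind chance in numbers, here is a rough sketch that treats random guessing as 200 independent picks among four options. That binomial model is an assumption added here for illustration, not something measured in the test, and `chanceBaseline` is a hypothetical name.

```javascript
// Expected score and standard deviation for pure guessing,
// modeled as independent picks among equally likely options.
function chanceBaseline(numQuestions, numOptions) {
  var p = 1 / numOptions;
  return {
    expected: numQuestions * p,
    stdDev: Math.sqrt(numQuestions * p * (1 - p)),
  };
}

var baseline = chanceBaseline(200, 4);

// How many standard deviations GPT-3's 96 correct sits above guessing.
var zScore = (96 - baseline.expected) / baseline.stdDev;

console.log(baseline.expected);  // 50 correct expected from guessing
console.log(zScore.toFixed(1));  // roughly 7.5
```

Under that model a guesser would expect about 50 correct answers, so 96 correct is far outside what luck alone would plausibly produce.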

And it’s important to remember: GPT-3 was not designed to take the MBE. It’s a comprehensive algorithm capable of adapting to a wide range of queries. If someone were to, say, utilize GPT-3 as a foundation upon which to build a model designed specifically for passing the MBE or providing legal advice, then one would expect the scores to drastically increase.

But for now, it’s wildly impressive what this generalized AI can do despite zero specialization in the area of law. And that alone is worth acknowledging.